A Deep State-Space Model Compression Method using Upper Bound on Output Error

Abstract

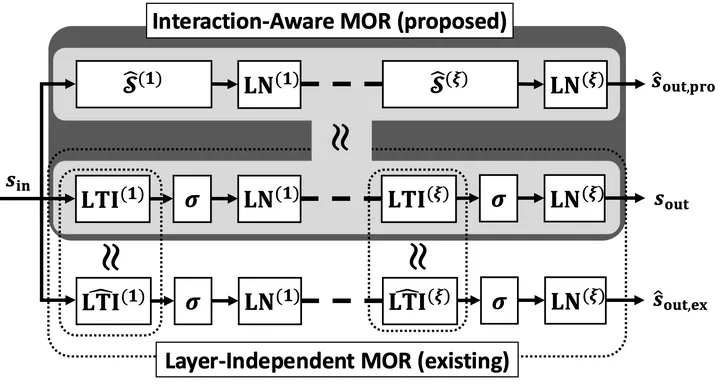

We study deep state-space models (Deep SSMs) that contain linear-quadratic-output (LQO) systems as internal blocks and present a compression method with a provable output error guarantee. We first derive an upper bound on the output error between two Deep SSMs and show that the bound can be expressed via the h2-error norms between the layerwise LQO systems, thereby providing a theoretical justification for existing model order reduction (MOR)-based compression. Building on this bound, we formulate an optimization problem in terms of the h2-error norm and develop a gradient-based MOR method. On the IMDb task from the Long Range Arena benchmark, we demonstrate that our compression method achieves strong performance. Moreover, unlike prior approaches, we reduce roughly 80% of trainable parameters without retraining, with only a 4-5% performance drop.

Type

Publication

preprint on arXiv