Compression Method for Deep Diagonal State Space Model Based on H2 Optimal Reduction

Abstract

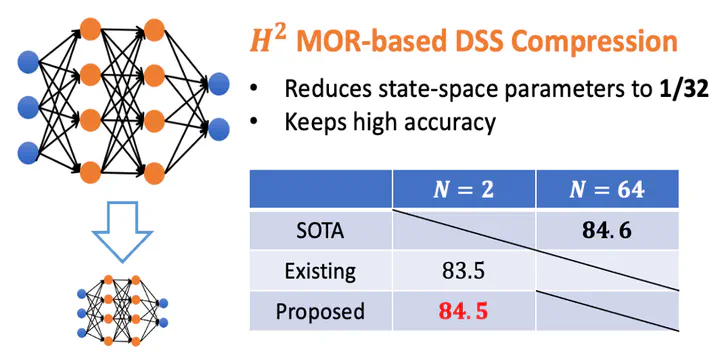

Deep learning models that incorporate linear state space models (SSMs) have gained attention for their ability to capture long-range dependencies in sequential data. However, their large parameter counts make deployment on resource-constrained devices difficult. In this study, we propose an efficient parameter reduction method for these models that applies H2 model order reduction techniques from control theory to their linear SSM components. Experiments on the Long Range Arena (LRA) benchmark show that model compression based on the proposed method outperforms an existing method based on balanced truncation, and that it reduces the number of parameters in the SSMs to 1/32 of the original without sacrificing the performance of the original models.
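As a rough illustration of the quantity that H2 model order reduction targets, the sketch below evaluates the H2 error between a diagonal SSM and a lower-order one in closed form. It is not the paper's algorithm. It assumes a continuous-time, single-input single-output system with stable poles, written as G(s) = Σ res[i] / (s − poles[i]). The names `h2_inner`, `h2_error`, and `truncate_by_mode_norm` are hypothetical, and the truncation rule is a naive stand-in for an actual H2-optimal reduction.

```python
import numpy as np

def h2_inner(poles_a, res_a, poles_b, res_b):
    """H2 inner product of two stable diagonal systems given as pole/residue pairs."""
    # For stable p, q: integral over t >= 0 of exp(p t) * conj(exp(q t)) = -1 / (p + conj(q)).
    gram = -1.0 / (poles_a[:, None] + np.conj(poles_b)[None, :])
    return res_a @ gram @ np.conj(res_b)

def h2_error(poles, res, poles_r, res_r):
    """H2 norm of the difference between the full and the reduced system."""
    sq = (h2_inner(poles, res, poles, res)
          - 2.0 * h2_inner(poles, res, poles_r, res_r).real
          + h2_inner(poles_r, res_r, poles_r, res_r)).real
    return np.sqrt(max(sq, 0.0))  # clip tiny negative round-off

def truncate_by_mode_norm(poles, res, r):
    """Naive baseline (an assumption, not the paper's method):
    keep the r modes whose individual H2 norm is largest."""
    score = np.abs(res) ** 2 / (2.0 * np.abs(poles.real))
    keep = np.argsort(score)[-r:]
    return poles[keep], res[keep]

# Synthetic example: 64 states reduced to 2, i.e. 1/32 of the original order.
rng = np.random.default_rng(0)
n, r = 64, 2
poles = -rng.uniform(0.1, 1.0, n) + 1j * rng.uniform(-10.0, 10.0, n)
res = rng.standard_normal(n) + 1j * rng.standard_normal(n)

poles_r, res_r = truncate_by_mode_norm(poles, res, r)
print("H2 error after naive truncation:", h2_error(poles, res, poles_r, res_r))
```

An H2-optimal reduction would instead choose the reduced poles and residues to minimize `h2_error` directly, which is why it can outperform selection-based schemes at the same reduced order.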

Publication

IEEE Control Systems Letters, Vol. 9, pp. 2043–2048